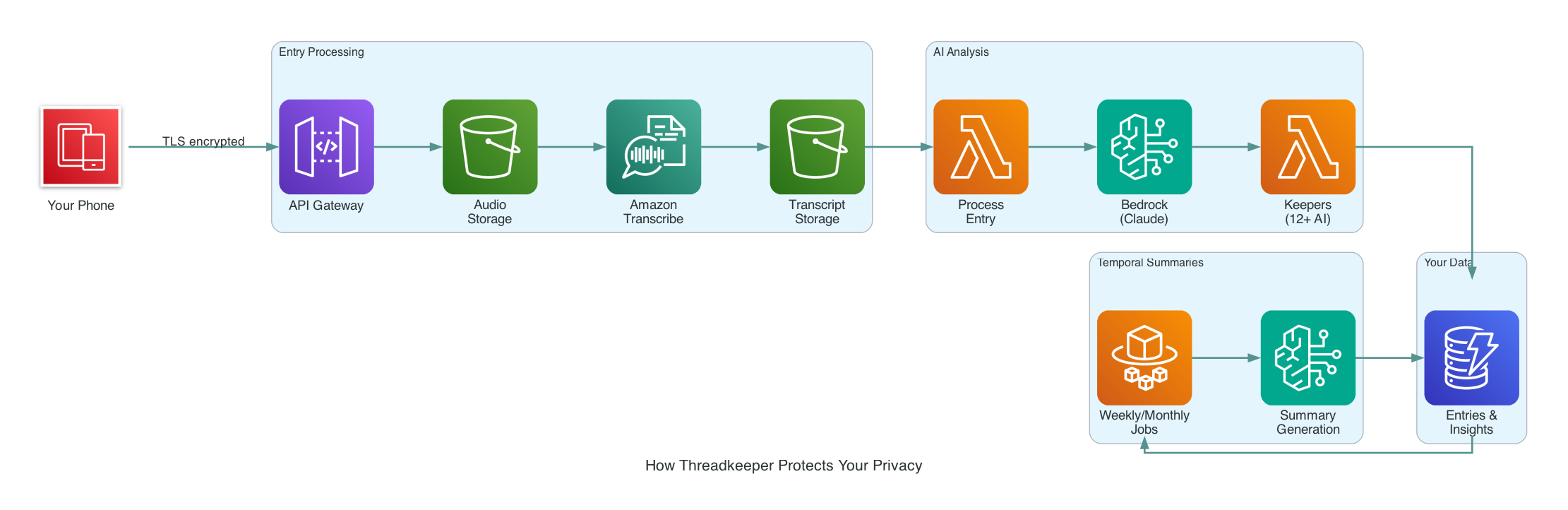

How Threadkeeper Protects Your Privacy

Jess Szmajda

Your journal entries are private and encrypted. But what does that actually mean? Where does your data go? Who can access it? These are exactly the questions you should ask any app that handles your inner life.

This post walks through the entire journey of your voice from the moment you tap record to the AI-generated summaries that help you see your own patterns.

The Journey of Your Voice

When you record a voice entry in Threadkeeper, here's what happens:

1. Recording on Your Phone

The audio stays on your device until upload. If you're offline, it waits; once you're back online, entries upload automatically. You can choose whether uploads happen over cellular data or only on Wi-Fi.
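The upload rule above can be sketched as a small decision function. This is only an illustration of the logic, not Threadkeeper's actual client code (which runs on the phone); the names `Network`, `should_upload`, `drain_queue`, and `wifi_only` are hypothetical.

```python
from enum import Enum


class Network(Enum):
    OFFLINE = "offline"
    CELLULAR = "cellular"
    WIFI = "wifi"


def should_upload(network: Network, wifi_only: bool) -> bool:
    """Return True if a queued recording may be uploaded right now."""
    if network is Network.OFFLINE:
        return False  # the entry waits on the device
    if network is Network.CELLULAR and wifi_only:
        return False  # the user restricted uploads to Wi-Fi
    return True


def drain_queue(pending: list[str], network: Network, wifi_only: bool) -> list[str]:
    """Return the entries that would be uploaded; otherwise everything stays queued."""
    return list(pending) if should_upload(network, wifi_only) else []
```

With `wifi_only=True`, a phone on cellular data keeps its recordings queued until it reaches Wi-Fi.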

2. Encrypted Upload

When you upload, your audio travels over TLS (the same encryption banks use for online transactions) to Amazon S3. The file is stored in a bucket that uses AWS Key Management Service (KMS) encryption, which relies on hardware security modules validated to FIPS 140-3, the standard U.S. federal agencies require for cryptography that protects sensitive data. The bucket is configured to reject any connection that isn't encrypted with TLS.
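One common way to make an S3 bucket refuse plaintext connections is a bucket policy with a `Deny` statement conditioned on `aws:SecureTransport`. The sketch below builds such a policy as plain JSON. It is an assumption about how a setup like this is typically expressed, not necessarily the exact policy Threadkeeper deploys, and the bucket name is hypothetical.

```python
import json


def deny_insecure_transport_policy(bucket_name: str) -> dict:
    """Build an S3 bucket policy that denies any request not made over TLS."""
    bucket_arn = f"arn:aws:s3:::{bucket_name}"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "DenyInsecureTransport",
                "Effect": "Deny",
                "Principal": "*",
                "Action": "s3:*",
                # Cover both the bucket itself and every object in it.
                "Resource": [bucket_arn, f"{bucket_arn}/*"],
                "Condition": {"Bool": {"aws:SecureTransport": "false"}},
            }
        ],
    }


# Hypothetical bucket name, used only for illustration.
policy = deny_insecure_transport_policy("example-threadkeeper-audio")
print(json.dumps(policy, indent=2))
```

Because the statement is an explicit `Deny`, it wins over any `Allow` elsewhere, so a request made over plain HTTP fails no matter who makes it.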

3. Transcription

Amazon Transcribe converts your speech to text. This happens entirely within AWS's network. The resulting transcript is stored in another KMS-encrypted S3 bucket.
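To make this step concrete, here is a sketch of the parameters a transcription request might carry so that the transcript lands in a KMS-encrypted bucket. The field names are those of Amazon Transcribe's `StartTranscriptionJob` API; the job name, bucket names, key alias, and the `en-US` language code are assumptions for illustration.

```python
def build_transcription_request(
    job_name: str, audio_uri: str, output_bucket: str, kms_key_id: str
) -> dict:
    """Build keyword arguments for Amazon Transcribe's StartTranscriptionJob."""
    return {
        "TranscriptionJobName": job_name,
        "Media": {"MediaFileUri": audio_uri},
        "LanguageCode": "en-US",  # assumption: English-language entries
        "OutputBucketName": output_bucket,  # transcript is written to this bucket
        "OutputEncryptionKMSKeyId": kms_key_id,  # transcript is encrypted with this key
    }


# Hypothetical identifiers, used only for illustration.
request = build_transcription_request(
    job_name="entry-0001",
    audio_uri="s3://example-threadkeeper-audio/entry-0001.m4a",
    output_bucket="example-threadkeeper-transcripts",
    kms_key_id="alias/example-transcripts-key",
)

# With boto3 installed and AWS credentials configured, this would be sent as:
#   boto3.client("transcribe").start_transcription_job(**request)
```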

4. AI Processing

Here's where your entry transforms from raw transcript into a Clarified Summary. A Lambda function reads your transcript and sends it to Amazon Bedrock, where Anthropic's Claude models:

- Clarify and clean up the transcript (removing "um"s and false starts)

- Extract key topics and themes

- Generate a summary of what you talked about

All of this stays on AWS's network. Bedrock runs the AI models inside AWS's infrastructure - your data never leaves their controlled environment.
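As a rough sketch of what the Lambda function might send, the snippet below builds a request body in the Anthropic Messages format that Bedrock accepts for Claude models. The system prompt and function name are hypothetical; the real prompts and model choice are not published in this post.

```python
import json

# Hypothetical instructions, written only to mirror the three tasks listed above.
SYSTEM_PROMPT = (
    "Clean up this voice-journal transcript: remove filler words and false "
    "starts, list the key topics and themes, and write a short summary."
)


def build_claude_request(transcript: str, max_tokens: int = 1024) -> str:
    """Return a JSON body in the Anthropic Messages format used on Bedrock."""
    body = {
        "anthropic_version": "bedrock-2023-05-31",
        "max_tokens": max_tokens,
        "system": SYSTEM_PROMPT,
        "messages": [
            {"role": "user", "content": [{"type": "text", "text": transcript}]}
        ],
    }
    return json.dumps(body)


# With boto3 installed and AWS credentials configured, the body would be sent as:
#   boto3.client("bedrock-runtime").invoke_model(modelId=MODEL_ID, body=body)
# where MODEL_ID is whichever Claude model the account has enabled.
```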

5. Keeper Analysis

Threadkeeper has specialized AI "keepers" that look at your entries through different lenses - Spirit, Shadow, Mind, Body, Work, and more. Each keeper processes your entry to surface different patterns and insights. This also runs through Bedrock with Claude models.
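Conceptually, keeper analysis is a fan-out: the same entry is paired with a different instruction for each lens. The sketch below shows that shape only. The prompt wording and function name are hypothetical, and the lens list covers just the keepers named above.

```python
# Only the lenses named in this post; the real list is longer.
KEEPER_LENSES = ["Spirit", "Shadow", "Mind", "Body", "Work"]


def build_keeper_prompts(entry: str, lenses: list[str] = KEEPER_LENSES) -> dict[str, str]:
    """Return one prompt per keeper, each pairing the entry with a lens."""
    return {
        lens: (
            f"Read this journal entry through the lens of {lens} and "
            f"describe any patterns you notice.\n\nEntry:\n{entry}"
        )
        for lens in lenses
    }


prompts = build_keeper_prompts("I felt tired but finished the project.")
```

Each prompt would then go to the model as its own request, so one keeper's output never depends on another's.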

6. Temporal Summaries

Daily scheduled jobs (running on AWS Fargate containers) look across your recent entries to generate weekly, monthly, seasonal, and yearly summaries. These roll up your individual thoughts into narratives that help you see trends over time.
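The roll-up starts by grouping entries into time periods. Here is a minimal sketch of that grouping for weeks and months, assuming ISO week numbering and a hypothetical `(date, text)` entry shape; the actual jobs would pass each group to the model to write a narrative.

```python
from collections import defaultdict
from datetime import date


def group_by_period(entries: list[tuple[date, str]]) -> dict[str, dict[str, list[str]]]:
    """Group entry texts by ISO week and by calendar month."""
    weekly = defaultdict(list)
    monthly = defaultdict(list)
    for day, text in entries:
        iso_year, iso_week = day.isocalendar()[0], day.isocalendar()[1]
        weekly[f"{iso_year}-W{iso_week:02d}"].append(text)
        monthly[f"{day.year}-{day.month:02d}"].append(text)
    return {"weekly": dict(weekly), "monthly": dict(monthly)}


groups = group_by_period(
    [
        (date(2024, 1, 1), "a"),
        (date(2024, 1, 3), "b"),
        (date(2024, 1, 8), "c"),
        (date(2024, 2, 1), "d"),
    ]
)
```

Seasonal and yearly summaries would follow the same pattern with a coarser key.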

What Gets Encrypted Where

Let me be specific about the encryption:

| Component | Encryption |
|---|---|
| Phone to API | TLS 1.2+ (in transit) |
| Audio files in S3 | KMS at rest, enforceSSL in transit |
| Transcripts in S3 | KMS at rest, enforceSSL in transit |
| DynamoDB tables | KMS at rest |
| Lambda to Bedrock | AWS internal network (encrypted) |
| Fargate to Bedrock | AWS internal network (encrypted) |

AWS KMS (Key Management Service) handles all the encryption keys. KMS uses hardware security modules validated to FIPS 140-3 Security Level 3 - the same standard required for U.S. government systems handling sensitive data. Keys are rotated automatically. Everything is encrypted both when stored (at rest) and when moving between services (in transit).

Who Can Access What

This is where I want to be completely honest.

Amazon Web Services: AWS operates the infrastructure. They can theoretically access anything running on their systems, though their business model depends on not doing so. They maintain extensive compliance certifications including SOC 2, HIPAA eligibility, FedRAMP, and 140+ other security standards that are independently audited.

Amazon Transcribe: Processes your audio to create transcripts. By default, AWS can use Transcribe content to improve the service - but I've opted out of this via AWS Organizations policy, so your audio is never used for training.
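For reference, an AWS Organizations AI services opt-out policy is a small JSON document. The sketch below builds one that opts out of every covered service by default; whether Threadkeeper's policy is scoped this broadly is an assumption.

```python
import json

# "default" applies the opt-out to all AI services covered by the policy type.
opt_out_policy = {
    "services": {
        "default": {
            "opt_out_policy": {"@@assign": "optOut"}
        }
    }
}

policy_content = json.dumps(opt_out_policy)

# With boto3 and organization admin credentials, this could be created with:
#   boto3.client("organizations").create_policy(
#       Name="ai-opt-out",                      # hypothetical name
#       Description="Opt out of AI service data use",
#       Type="AISERVICES_OPT_OUT_POLICY",
#       Content=policy_content,
#   )
# and then attached to the organization root or a specific account.
```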

Amazon Bedrock (Anthropic Claude): The AI that processes your entries. Bedrock has a critical guarantee: your data is never used to train models. This isn't just a policy - it's architecturally enforced. Your prompts and outputs are encrypted, processed, and discarded. Model providers (like Anthropic) have no access to the accounts where models run - they deliver the model to AWS and that's it. Anthropic never sees your data.

Me: Here's the honest gap. I have access to the KMS encryption keys because I deploy and maintain the infrastructure. I could theoretically look at your data. I don't, and I won't, but that requires trusting me.

Why Not Run Everything Locally?

Running AI entirely on your device would be the most private option - your data would never leave your phone. But current on-device models aren't capable enough to generate the quality of summaries and insights that make Threadkeeper useful.

So there's a trade-off: you're trusting AWS and me in exchange for AI capabilities that require cloud processing. I think that's a reasonable trade for most people, but I want you to understand it clearly.

What I'm Working On

I'm exploring ways to add customer-managed encryption - a system where you hold a key that I don't have access to. Some options:

- Firebase's cryptographic attestation

- Client-side encryption before upload

- Zero-knowledge key derivation from your login credentials

None of these are simple to implement while keeping the app usable, but I'm committed to finding a path where even I can't access your data if you don't want me to.
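To illustrate one of these directions, here is a minimal sketch of deriving an encryption key on the device from something only you know, using PBKDF2 from Python's standard library. This is not a design I've committed to; the function name, passphrase, and iteration count are assumptions for illustration.

```python
import hashlib
import os


def derive_key(passphrase: str, salt: bytes, iterations: int = 600_000) -> bytes:
    """Derive a 32-byte key from a passphrase using PBKDF2-HMAC-SHA256."""
    return hashlib.pbkdf2_hmac(
        "sha256", passphrase.encode("utf-8"), salt, iterations, dklen=32
    )


# The salt is random per user and can be stored on the server; it is not secret.
salt = os.urandom(16)
key = derive_key("correct horse battery staple", salt)

# The key never leaves the device. It would be used with an authenticated
# cipher (for example AES-GCM from a vetted library) to encrypt the audio
# before upload, so the server only ever stores ciphertext.
```

The hard part is everything around this: a forgotten passphrase means unrecoverable entries, and server-side transcription can't read audio it cannot decrypt.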

The Architecture

Here's a simplified view of how the pieces fit together:

[Architecture diagram: phone, S3, transcription, AI processing, summaries]

Your data flows from left to right: phone to S3 to transcription to AI processing to your summaries. Every arrow represents an encrypted connection. Every box represents encrypted storage.

Questions?

If you have questions about how Threadkeeper handles your data, I'm happy to answer them. Reach out at hello@threadkeeper.app.

Your inner life deserves transparency about how it's protected.